Diffusion model training - Dreambooth

The Orbofi factories (AI models) and factory training are powered by DreamBooth, a method initially released by Google Research for fine-tuning diffusion models.

Note: Orbofi was not part of this research and did not contribute to its R&D. Orbofi leverages this framework and links to it for reference and transparency.

DreamBooth: Fine Tuning Text-to-Image Diffusion Models for Subject-Driven Generation

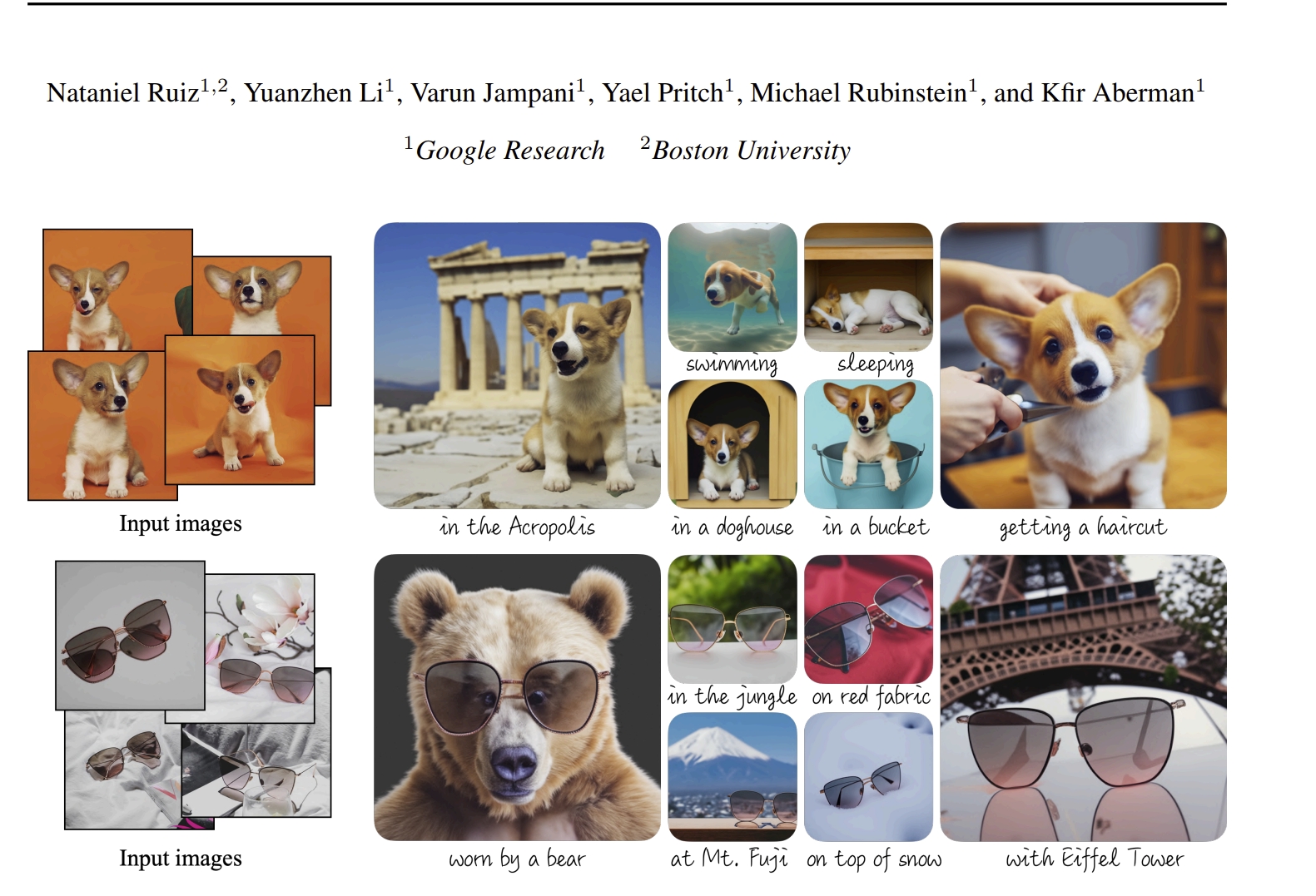

Abstract

Large text-to-image models achieved a remarkable leap in the evolution of AI, enabling high-quality and diverse synthesis of images from a given text prompt. However, these models lack the ability to mimic the appearance of subjects in a given reference set and synthesize novel renditions of them in different contexts. In this work, we present a new approach for “personalization” of text-to-image diffusion models (specializing them to users’ needs). Given as input just a few images of a subject, we fine-tune a pretrained text-to-image model (Imagen, although our method is not limited to a specific model) such that it learns to bind a unique identifier with that specific subject. Once the subject is embedded in the output domain of the model, the unique identifier can then be used to synthesize fully-novel photorealistic images of the subject contextualized in different scenes. By leveraging the semantic prior embedded in the model with a new autogenous class-specific prior preservation loss, our technique enables synthesizing the subject in diverse scenes, poses, views, and lighting conditions that do not appear in the reference images. We apply our technique to several previously-unassailable tasks, including subject recontextualization, text-guided view synthesis, appearance modification, and artistic rendering (all while preserving the subject’s key features).

Project page: https://dreambooth.github.io/

1 Introduction

Can you imagine your own dog traveling around the world, or your favorite bag displayed in the most exclusive showroom in Paris? What about your parrot being the main character of an illustrated storybook? Rendering such imaginary scenes is a challenging task that requires synthesizing instances of specific subjects (objects, animals, etc.) in new contexts such that they naturally and seamlessly blend into the scene.

Recently developed large text-to-image models achieve a remarkable leap in the evolution of AI, by enabling high-quality and diverse synthesis of images based on a text prompt written in natural language [56, 51]. One of the main advantages of such models is the strong semantic prior learned from a large collection of image-caption pairs. Such a prior learns, for instance, to bind the word “dog” with various instances of dogs that can appear in different poses and contexts in an image. While the synthesis capabilities of these models are unprecedented, they lack the ability to mimic the appearance of subjects in a given reference set, and synthesize novel renditions of those same subjects in different contexts.
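
The abstract describes two ingredients: a unique identifier bound to the subject, and a class-specific prior preservation loss that keeps the model's general knowledge of the subject's class. The sketch below illustrates how these could fit together. It is a simplified illustration and not Orbofi's or the paper's implementation. The function names (`build_prompts`, `dreambooth_loss`), the `prior_weight` parameter, the prompt template, and the `[V]` placeholder are all assumptions made for this example, and plain lists of floats stand in for the image-sized tensors a real diffusion model would predict.

```python
from typing import Sequence, Tuple


def build_prompts(identifier: str, class_noun: str) -> Tuple[str, str]:
    """Return (instance_prompt, class_prompt).

    The instance prompt pairs the unique identifier with the class noun;
    the class prompt names only the class and is used for the prior term.
    The "a <identifier> <class>" template is an assumption for illustration.
    """
    instance_prompt = f"a {identifier} {class_noun}"
    class_prompt = f"a {class_noun}"
    return instance_prompt, class_prompt


def mse(pred: Sequence[float], target: Sequence[float]) -> float:
    """Mean squared error between two equal-length vectors."""
    if len(pred) != len(target):
        raise ValueError("pred and target must have the same length")
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def dreambooth_loss(
    instance_pred: Sequence[float],
    instance_target: Sequence[float],
    prior_pred: Sequence[float],
    prior_target: Sequence[float],
    prior_weight: float = 1.0,
) -> float:
    """Subject reconstruction term plus a weighted prior preservation term.

    instance_*: model output vs. target for the few subject images.
    prior_*:    model output vs. target for class images generated by the
                original (frozen) model, which anchor the class prior.
    """
    instance_term = mse(instance_pred, instance_target)
    prior_term = mse(prior_pred, prior_target)
    return instance_term + prior_weight * prior_term


if __name__ == "__main__":
    # "[V]" is a placeholder for a rare-token identifier, not a real token.
    print(build_prompts("[V]", "dog"))
    print(dreambooth_loss([0.0, 2.0], [1.0, 0.0], [1.0, 1.0], [1.0, 3.0], prior_weight=0.5))
```

Under these assumptions, raising `prior_weight` pushes training toward preserving the class prior, while lowering it favors fidelity to the few subject images.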
